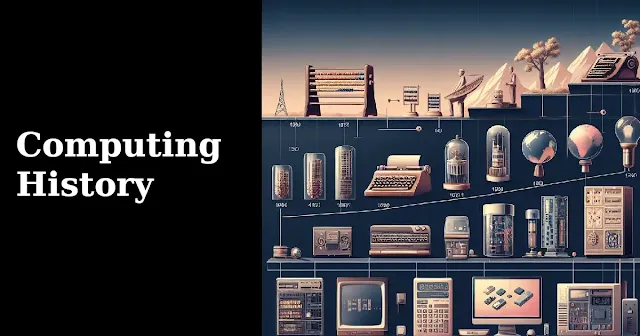

Cryptobit 1111 Technology - Join us on a journey through the origins of innovation, exploring the fascinating history of computing. From abaci to quantum marvels, discover the stories behind our digital world.

Computing History

Let's take a moment to imagine a world where every time we press a key on our keyboards, instead of seeing letters on a screen, we hear the sound of the mechanical gears of Charles Babbage's Analytical Engine. It's fascinating to think that once upon a time, Alan Turing's visionary Turing Machine was the epitome of a universal machine long before sleek laptops existed. The journey from the abacus to quantum computers is a remarkable testament to human ingenuity: a story worth exploring.

In this article, we delve into the origins of innovation and uncover the story behind the devices we use today. The emergence of transistors, integrated circuits, and personal computers was not an overnight phenomenon. Instead, they resulted from human curiosity and perseverance and evolved through the hard work of early computing pioneers. Join us as we peel back the layers of history and explore this fascinating journey.

Understanding this evolution is not a mere stroll down memory lane; it's a voyage into the core of our daily lives. The smartphones we navigate, the laptops we type on, and the networks that connect us all inherit a legacy shaped by the brilliance of minds like Turing, Babbage, and Lovelace.

So, join us in this journey through the history of computing. It's not just a chronological tale; it's a narrative that unfolds the essence of innovation, revealing how the threads of the past are intricately woven into the very fabric of our technological present. As we traverse the milestones, anticipate not just nostalgia but a profound realization—the devices we wield are not just tools; they are the manifestations of centuries of human curiosity, resilience, and an unyielding pursuit of progress. The digital era awaits our exploration, and the historical backdrop beckons us to understand where we are and how we arrived here. Let the unraveling begin.

Computing Through Time

Early Computing

Once upon a time, in the annals of human history, when the world was adorned with the simplicity of ancient wisdom, an ingenious device emerged—the abacus. Picture yourself in the bustling marketplaces of ancient civilizations, where merchants skillfully manipulated beads on a frame, performing calculations that would shape the foundations of arithmetic.

As the abacus facilitated the art of counting, a whisper of innovation echoed through time, leading us to the mechanical marvels of visionaries like Blaise Pascal and Gottfried Wilhelm Leibniz. In the 17th century, Pascal's Pascaline graced the world—an intricate contraption of gears and dials designed to alleviate the burden of numerical calculations.

Leibniz carried the journey further with his Stepped Reckoner, whose stepped-drum mechanism let it multiply and divide as well as add and subtract. Imagine the crisp sound of each step, a mechanical dance of precision, as Leibniz sought to automate complex mathematical operations. These early mechanical calculators were the gentle murmurs of an impending revolution.

The 19th Century

Now, let us fast forward to the 19th century, when dreams of machines that could think danced in the minds of visionaries. Among them was Charles Babbage, an English mathematician and inventor whose vision transcended the limitations of his era. Imagine the clinking of cogs and gears as Babbage conceived the Analytical Engine, a general-purpose mechanical computer that was to be programmed with punched cards and could, in principle, carry out any sequence of arithmetic operations.

Alas, the Analytical Engine remained a dream deferred, a symphony of gears waiting to be played. But fear not, for Babbage's legacy found an unexpected companion in Ada Lovelace. Picture Lovelace, the enchantress of numbers, translating the Analytical Engine's potential into what is often called the world's first computer program. Her notes breathed life into Babbage's mechanical musings like a magical incantation.

As we bid adieu to this chapter of our tale, the stage is set for the next act—the advent of electronic computing. The abacus, Pascal's gears, Babbage's dreams, and Lovelace's insights have woven a tapestry of innovation. The curtain rises on a new era, where electrons dance, circuits hum, and the story of computing continues to unfold.

Babbage and Lovelace

In the heart of the 19th century, where the echoes of progress harmonized with the clinking of gears, there stood a visionary mathematician and inventor: Charles Babbage. Picture a world brimming with possibilities as Babbage conceived the extraordinary Analytical Engine, a mechanical marvel designed to perform complex calculations with a precision never before possible.

As the story unfolds, imagine yourself in Charles Babbage's workshop, where metal and mechanics combine to create the Analytical Engine. The mesmerizing rhythm of gears turning in perfect unison is a symphony of precision guided by Babbage's brilliant design. However, fate took a strange twist: the Analytical Engine was never completed in Babbage's lifetime, remaining a magnificent invention that existed largely in his drawings.

Enter Ada Lovelace, a luminary fueled by passion for numbers and imagination. In the dance of gears and dreams, Lovelace emerged as the enchantress of the Analytical Engine. Picture her at the crossroads of mathematics and creativity as she translated Babbage's vision into an enchanting sequence of instructions, widely regarded as the world's first computer program.

Lovelace's notes, a testament to the marriage of art and science, unfolded like a magical incantation breathing life into the Analytical Engine's dormant potential. In the quiet corridors of history, Ada Lovelace came to be celebrated as the world's first computer programmer, her legacy resonating through time as a beacon of innovation.

Beyond Time

Together, Babbage and Lovelace wrote a story that went beyond their time and paved the way for today's technology. Their collaboration, uniting Babbage's mechanical ingenuity with Lovelace's imaginative insight, is considered a landmark in the history of computing.

As we depart from this tale, the legacy of Babbage and Lovelace lingers—a gift woven with the threads of innovation, where dreams of machines that could think found their first whispers. The story of computing unfolds further, beckoning us to explore the realms of electronic marvels and theoretical wonders. Stay tuned for the next chapter, where electrons dance and the computing symphony continues.

ENIAC, Colossus, and Codebreaking

In the mid-20th century, the world was at war, and the sound of innovation was becoming louder. Our story takes place during a transformative era of electronic computing and the clatter of machinery that would shape history.

ENIAC: The Birth of Electronic Computation

Imagine a room filled with towering machines, their electronic pulses echoing through the corridors of progress. The Electronic Numerical Integrator and Computer (ENIAC) emerges in this realm—a colossal creation designed to tackle complex numerical calculations. Picture the intricate dance of vacuum tubes and the hum of electronic circuits as ENIAC, the world's first general-purpose electronic digital computer, comes to life.

As the war-torn world sought solutions, ENIAC promised rapid calculation for crucial wartime work: it was built to compute artillery firing tables for the U.S. Army, though it was completed only after the war had ended. Its advent marked a pivotal moment, ushering in the age of electronic computation and setting the stage for a digital revolution.

Colossus: Deciphering the Secrets of War

Venture across the Atlantic to Bletchley Park in England, where, far from the front lines, codebreakers worked behind the scenes to turn the tide of war. Enter Colossus, a codebreaking marvel born from the brilliance of British engineer Tommy Flowers.

Picture the urgency of wartime, where encrypted messages held the key to victory. With its array of vacuum tubes and its symphony of electronic pulses, Colossus became the world's first programmable digital electronic computer. Its mission was to help break the Lorenz cipher that protected the German High Command's teleprinter messages, a triumph of technological ingenuity.

As the war concluded, the secrets of Colossus remained veiled for decades, a testament to the silent heroes of codebreaking whose innovations paved the way for the electronic age.

Our tale of early electronic computers pauses here amidst the hum of vacuum tubes and the echoes of wartime codebreaking. The journey continues, inviting us to explore the theoretical wonders of Alan Turing's Turing Machine. Stay tuned as we unravel the threads of innovation and witness the symphony of electronic computation evolving.

The Turing Machine

Our narrative now steps back to the 1930s, where the stage is set for a theoretical maestro: Alan Turing. Picture a world on the brink of war, where mathematicians were wrestling with the very question of what it means to compute.

Alan Turing's Theoretical Contributions

In the quiet corridors of academia, Alan Turing emerges as a prodigy, an unconventional thinker fueled by a profound understanding of logic and computation. Envision the theoretical realm he inhabits, where, in his 1936 paper "On Computable Numbers," the concept of a universal machine crystallizes into what we now know as the Turing Machine.

As our story unfolds, see Turing's theoretical machine: a simple abstract device that reads and writes symbols on an unbounded tape, one cell at a time, following a finite table of rules that depends on its current state. Despite that simplicity, it can in principle carry out any algorithm. The rhythmic dance of its tape and the intricate interplay of its states mark the birth of theoretical computer science. Turing's visionary concept transcends the physical limitations of machinery, laying the theoretical groundwork for the computers we use today.
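To make the idea of a tape and a table of states a little more concrete, here is a minimal Python sketch of a single-tape Turing machine simulator. The function name `run_turing_machine`, the rule format, the blank symbol `_`, and the binary-increment example machine are all illustrative assumptions of this sketch, not Turing's original notation.

```python
from collections import defaultdict

BLANK = "_"  # symbol for an empty tape cell (an assumption of this sketch)

# Hypothetical example machine: add 1 to a binary number.
# Each rule maps (state, symbol read) -> (symbol to write, head move, next state),
# where a head move of +1 means "one cell right" and -1 means "one cell left".
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),      # scan right over the digits
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", -1, "done"),       # 0 + carry = 1, finished
    ("carry", BLANK): ("1", -1, "done"),     # ran off the left end: new leading 1
}


def run_turing_machine(rules, tape_input, start_state, halt_state, max_steps=10_000):
    """Simulate a single-tape Turing machine and return the non-blank tape contents."""
    # The tape is unbounded in both directions: cells never written read as BLANK.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start_state, 0
    while state != halt_state:
        if steps == max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        # Look up the rule for the current state and the symbol under the head.
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    # Read the tape back from the leftmost to the rightmost non-blank cell.
    used = [i for i, symbol in tape.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(tape[i] for i in range(min(used), max(used) + 1))


# 1011 (eleven) plus one is 1100 (twelve).
print(run_turing_machine(INCREMENT, "1011", "right", "done"))  # prints 1100
```

A dictionary-backed tape lets the machine grow in either direction, echoing the unbounded tape of Turing's model. The `max_steps` guard is a practical concession: there is no general way to decide in advance whether an arbitrary machine will halt (the halting problem), so a simulator has to stop somewhere.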

Turing's Role in Codebreaking

But Turing's brilliance extends beyond theory, resonating with the practical demands of his time. Picture the World War II landscape, where the Enigma cipher guarded the secrets of the Axis powers. With his keen intellect, Turing leads the charge at Bletchley Park—a clandestine sanctuary of codebreakers.

Envision the relentless pursuit of breaking the Enigma cipher, a task that seemed insurmountable. Yet, like a cryptographic symphony, Turing's mind helps orchestrate the solution. Building on earlier breakthroughs by Polish cryptanalysts, Turing drives the design of the electromechanical bombe, and with it and the brilliance of his team, Enigma messages are broken, unlocking vital intelligence and altering the course of history.

Alan Turing, the theoretical luminary and wartime codebreaker, leaves an indelible mark on the tapestry of computing. His legacy reverberates, guiding us through the evolution of electronic computation and inspiring future generations.

The Turing Machine is both a theoretical construct and a bridge between logic and application. The saga of computing unfolds further, inviting us to explore the electronic wonders that emerged from Turing's visionary contributions. Stay tuned as we traverse the landscape of post-war innovations and witness the dawn of the digital age.

Beyond the Turing Era: Transistors, Integrated Circuits, and Personal Computers

Our journey through the evolution of computing continues, transitioning from the theoretical brilliance of Alan Turing to the tangible innovations that followed in the wake of his groundbreaking contributions.

Transistors and the Miniaturization Revolution

Imagine a world awakening from the theoretical dreamscapes of the Turing Machine, eager for practical applications. The transistor, invented at Bell Labs in 1947, went on to revolutionize the computing landscape in the 1950s and 1960s. Picture the transition from bulky vacuum tubes to these miniature semiconductor devices, marking the era of miniaturization.

As transistors usher in a new age, envision the once massive electronic machines shrinking in size. The electronic pulses that once echoed in cavernous rooms now hum within compact devices. This miniaturization paves the way for faster, more efficient, and more portable computing.

Integrated Circuits: The Birth of Microelectronics

Step further into the late 1950s and 1960s, when the integration of transistors reached new heights with the invention of the integrated circuit. Picture the intricate pathways etched onto a single chip of silicon, housing multiple transistors and other electronic components. The birth of microelectronics unfolds, allowing complex circuits to be built on a microscopic scale.

The microchip, a testament to human ingenuity, has become the cornerstone of modern computing devices. The evolution from discrete components to integrated circuits accelerates the pace of technological progress, shaping the interconnected world we know today.

Personal Computers: Empowering Individuals

Now, imagine the late 20th century—when the dream of computing extends beyond academic institutions and research facilities. Visualize the emergence of personal computers, such as the iconic Apple II and IBM PC. These devices, once a rarity, now find their way into homes, offices, and the hands of individuals.

Picture the click-clack of keyboards, the hum of disk drives, and the glow of cathode-ray tube monitors. Personal computers empower users with the ability to navigate the digital realm, fostering a new era of accessibility and creativity. Computing, once the preserve of universities and research labs, has become a tool for individuals to explore, learn, and innovate.

The landscape of computing transforms from theoretical musings to tangible advancements. The story of innovation echoes in the halls of progress, inviting us to delve into the following chapters—the rise of the internet, the era of digital connectivity, and the contemporary tapestry of technological wonders. Stay tuned as we unravel the threads of electronic symphonies and witness the dawn of the digital age.

Takeaway

As we conclude this chapter in the history of computing, we invite you to share your thoughts. What aspect fascinated you the most? Feel free to comment below and stay tuned for our next exploration into the wonders of technology!

DIY Education, stay curious, stay inspired, and keep exploring.